This template scaffolds a LangChain.js + Next.js starter app that runs on Edgio’s delivery network and showcases several use cases for different LangChain modules.

Running on Edgio gives the app faster load times, a better user experience, and global deployment, which makes Edgio a good fit for developing and deploying AI-powered applications at scale.

Prerequisites

Setup requires:

- An Edgio account. Sign up for free.

- An Edgio property. Learn how to create a property.

- Node.js. View supported versions and installation steps.

- Edgio CLI.

Install the Edgio CLI

If you have not already done so, install the Edgio CLI.

```bash
npm i -g @edgio/cli@latest
```

Getting Started

Clone the edgio-v7-ai-example repo and change to its langchain directory:

```bash
git clone https://github.com/edgio-docs/edgio-v7-ai-example.git
cd edgio-v7-ai-example/langchain
```

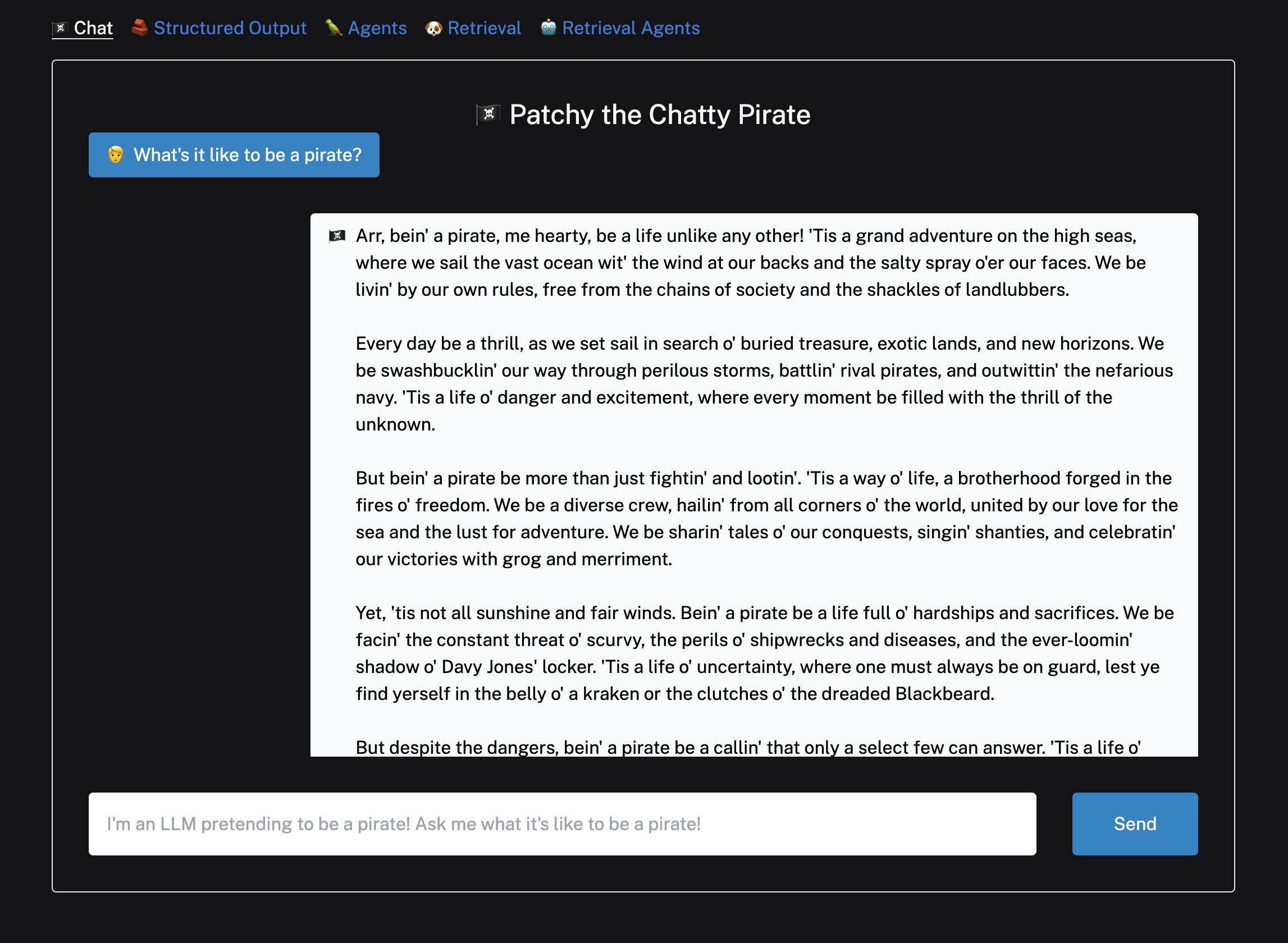

Chat

Set up environment variables by copying the `.env.example` file to `.env.local`:

```bash
cp .env.example .env.local
```

To start with the basic examples, you only need to add your OpenAI API key to `.env.local`:

```bash
OPENAI_API_KEY="YOUR_API_KEY"
```

Install the required packages and run the development server:

```bash
npm i
npm run dev
```

Open http://localhost:3000 in your browser and ask the bot something; you’ll see a streamed response. To start customizing the chat interface, edit `app/page.tsx`.
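The page and the backend communicate over a single streaming HTTP request. As a minimal sketch of that exchange, the helper below posts the conversation to `/api/chat` and collects the reply chunk by chunk as it arrives. It assumes the request body shape `{messages: [{role, content}]}` that the route handler in `app/api/chat/route.ts` reads from `body.messages`; `streamChat`, `FetchLike`, and the `fetchImpl` parameter are hypothetical names, not part of the template.

```typescript
// Hypothetical client helper (not part of the template): posts the conversation
// to the chat route and collects the streamed reply chunk by chunk.
type ChatMessage = {role: 'user' | 'assistant'; content: string};

// Minimal fetch signature, so a stub can be injected for testing
type FetchLike = (
  url: string,
  init: {method: string; headers: Record<string, string>; body: string},
) => Promise<Response>;

async function streamChat(
  messages: ChatMessage[],
  onChunk: (text: string) => void,
  fetchImpl: FetchLike = fetch,
  url = '/api/chat',
): Promise<string> {
  const res = await fetchImpl(url, {
    method: 'POST',
    headers: {'Content-Type': 'application/json'},
    // Same body shape the route handler reads: body.messages
    body: JSON.stringify({messages}),
  });
  if (!res.ok || !res.body) {
    throw new Error(`Chat request failed with status ${res.status}`);
  }

  const reader = res.body.getReader();
  const decoder = new TextDecoder();
  let fullText = '';
  // Read byte chunks until the stream ends, decoding them incrementally
  while (true) {
    const {done, value} = await reader.read();
    if (done) break;
    const text = decoder.decode(value, {stream: true});
    fullText += text;
    onChunk(text);
  }
  // Flush any bytes the streaming decoder held back
  const tail = decoder.decode();
  if (tail) {
    fullText += tail;
    onChunk(tail);
  }
  return fullText;
}
```

In a React component, `onChunk` could append text to component state so the reply renders while it streams. The template’s own page may use a different mechanism, so treat this helper as illustrative only.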

The backend logic lives in `app/api/chat/route.ts`. There you can change the prompt, which is stored in the `TEMPLATE` variable, and the model, which is selected by the `modelName` option passed to the `ChatOpenAI` client.

```typescript
// app/api/chat/route.ts
import {NextRequest, NextResponse} from 'next/server';
import {Message as VercelChatMessage, StreamingTextResponse} from 'ai';
import {ChatOpenAI} from '@langchain/openai';
import {PromptTemplate} from '@langchain/core/prompts';
import {HttpResponseOutputParser} from 'langchain/output_parsers';

// Run this route on the Edge runtime
export const runtime = 'edge';

// Render a chat message as a "role: content" line for the prompt history
const formatMessage = (message: VercelChatMessage) => {
  return `${message.role}: ${message.content}`;
};

// Prompt template: edit this to change the bot's persona and instructions
const TEMPLATE = `You are a pirate named Patchy. All responses must be extremely verbose and in pirate dialect.

Current conversation:
{chat_history}

User: {input}
AI:`;

export async function POST(req: NextRequest) {
  try {
    const body = await req.json();
    const messages = body.messages ?? [];
    // All messages except the last form the conversation history
    const formattedPreviousMessages = messages.slice(0, -1).map(formatMessage);
    // The last message is the user's current question
    const currentMessageContent = messages[messages.length - 1].content;
    const prompt = PromptTemplate.fromTemplate(TEMPLATE);

    // Change modelName here to use a different OpenAI model
    const model = new ChatOpenAI({
      temperature: 0.8,
      modelName: 'gpt-3.5-turbo-1106',
    });

    // Converts the model's streamed message chunks into bytes for the HTTP response
    const outputParser = new HttpResponseOutputParser();

    // prompt -> model -> output parser
    const chain = prompt.pipe(model).pipe(outputParser);

    const stream = await chain.stream({
      chat_history: formattedPreviousMessages.join('\n'),
      input: currentMessageContent,
    });

    return new StreamingTextResponse(stream);
  } catch (e: any) {
    // Return any error as a JSON 500 response
    return NextResponse.json({error: e.message}, {status: 500});
  }
}
```
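To see exactly what text the model receives, it can help to reproduce the handler’s message handling outside LangChain. The sketch below mirrors `formatMessage` and the `slice(0, -1)` / last-message split, then fills the `{chat_history}` and `{input}` placeholders with a plain regular-expression replacement. `buildPrompt` is a hypothetical helper; `PromptTemplate` does its own template parsing, so treat the result as an approximation of the rendered prompt rather than LangChain’s implementation.

```typescript
// Hypothetical helper mirroring the route handler's prompt assembly.
// A regex replacement stands in for PromptTemplate here.
type ChatMessage = {role: string; content: string};

const TEMPLATE = `You are a pirate named Patchy. All responses must be extremely verbose and in pirate dialect.

Current conversation:
{chat_history}

User: {input}
AI:`;

const formatMessage = (message: ChatMessage) => `${message.role}: ${message.content}`;

function buildPrompt(messages: ChatMessage[]): string {
  if (messages.length === 0) {
    throw new Error('At least one message is required');
  }
  // Every message except the last becomes history, one "role: content" line each
  const chatHistory = messages.slice(0, -1).map(formatMessage).join('\n');
  // The last message is the user's current input
  const input = messages[messages.length - 1].content;
  const values: Record<string, string> = {chat_history: chatHistory, input};
  // Replace both placeholders in one pass; the function replacer keeps "$" literal
  return TEMPLATE.replace(/\{(chat_history|input)\}/g, (_match: string, key: string) => values[key]);
}
```

The explicit empty-array check is a design choice of this sketch: in the route handler, an empty `messages` array makes `messages[messages.length - 1].content` throw a `TypeError`, which the `catch` block turns into a 500 response.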

The `POST` handler initializes and calls a simple chain made of a prompt, a chat model, and an output parser. See the LangChain docs for more information.

- Chat models stream message chunks rather than bytes, so the `HttpResponseOutputParser` instance assigned to `outputParser` handles serialization and byte-encoding.
- To select a different model, initialize a different chat model client, for example as follows (the LangChain docs list all supported chat models):

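```typescript
// Requires the @langchain/anthropic package (npm i @langchain/anthropic)
// and an ANTHROPIC_API_KEY environment variable
import {ChatAnthropic} from '@langchain/anthropic';

const model = new ChatAnthropic({});
```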
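The point that chat models stream message chunks rather than bytes is easier to picture with a small, framework-free sketch. The hypothetical helpers below (`toByteStream`, `fakeModelStream`, `demo`) are not LangChain code and do not reproduce `HttpResponseOutputParser`; they only illustrate the general idea of UTF-8-encoding string chunks as they arrive so the result can serve as a streaming `Response` body.

```typescript
// Simplified illustration (hypothetical helpers, not LangChain code):
// turn a stream of string chunks into a byte stream an HTTP response can carry.
function toByteStream(chunks: ReadableStream<string>): ReadableStream<Uint8Array> {
  const encoder = new TextEncoder();
  return chunks.pipeThrough(
    new TransformStream<string, Uint8Array>({
      // Encode each text chunk as UTF-8 bytes as soon as it arrives
      transform(chunk, controller) {
        controller.enqueue(encoder.encode(chunk));
      },
    }),
  );
}

// Hypothetical stand-in for a chat model's streamed text output
function fakeModelStream(parts: string[]): ReadableStream<string> {
  return new ReadableStream<string>({
    start(controller) {
      for (const part of parts) controller.enqueue(part);
      controller.close();
    },
  });
}

// A byte stream can be used directly as a Response body
async function demo(): Promise<string> {
  const response = new Response(toByteStream(fakeModelStream(['Ahoy', ', ', 'matey!'])));
  return response.text();
}
```

Because encoding happens per chunk inside a `TransformStream`, bytes can reach the client before the whole reply has been generated.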

Agents and Retrieval

To try out the Agent or Retrieval examples, follow the instructions in the Edgio AI repository.

Deployment

Install the Edgio CLI if you haven’t already. Run your development server with `edgio dev`:

```bash
edgio dev
```

Deploy your project with `edgio deploy`:

```bash
edgio deploy
```